Overview of AMHAT

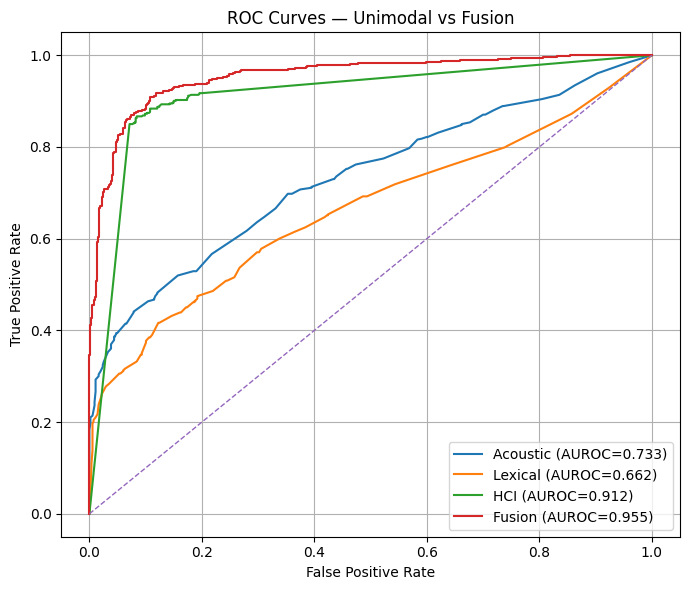

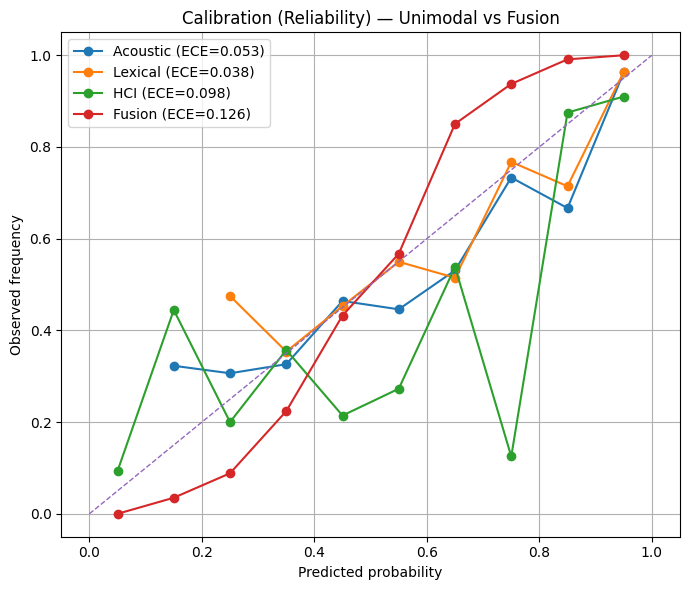

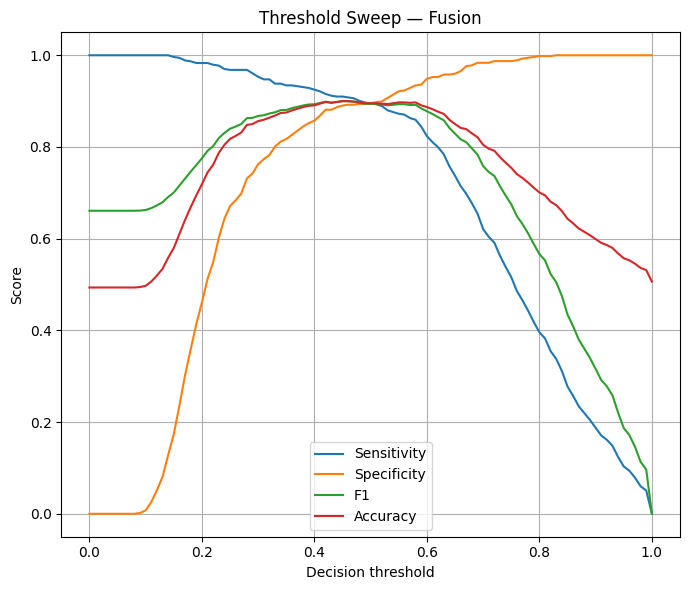

AMHAT is a multimodal assessment framework that integrates speech, text, and detailed human–computer interaction signals to screen for psychological stress in a privacy-preserving way.

AMHAT processes data offline with a privacy-first design: raw audio, video, and text are not stored. Only the derived features needed for modeling are retained, which reduces privacy risk while preserving predictive power.
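As a rough illustration of the "derived features only" idea, the sketch below reduces a short keystroke log to a few timing statistics and keeps nothing else. It is not taken from the AMHAT codebase: the event layout `(key, press_ms, release_ms)`, the `KeystrokeFeatures` record, and the function name `extract_keystroke_features` are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass
from statistics import mean, pstdev
from typing import List, Tuple

# Hypothetical raw event: (key, press time in ms, release time in ms).
KeyEvent = Tuple[str, float, float]


@dataclass(frozen=True)
class KeystrokeFeatures:
    """Derived timing statistics only; no key identities are kept."""
    n_events: int
    mean_dwell_ms: float   # average time a key is held down
    mean_flight_ms: float  # average gap between release and next press
    std_flight_ms: float   # population std. dev. of those gaps


def extract_keystroke_features(events: List[KeyEvent]) -> KeystrokeFeatures:
    """Summarize events (assumed sorted by press time) into timing features."""
    dwell = [release - press for _, press, release in events]
    flight = [events[i + 1][1] - events[i][2] for i in range(len(events) - 1)]
    return KeystrokeFeatures(
        n_events=len(events),
        mean_dwell_ms=mean(dwell) if dwell else 0.0,
        mean_flight_ms=mean(flight) if flight else 0.0,
        std_flight_ms=pstdev(flight) if flight else 0.0,
    )


raw_events = [("a", 0.0, 80.0), ("b", 200.0, 300.0), ("c", 350.0, 410.0)]
features = extract_keystroke_features(raw_events)
del raw_events  # the raw log is discarded; only `features` would be persisted
print(features)
```

The returned record holds aggregate timings only, so the typed content cannot be reconstructed from it. A real pipeline would need the same property for its speech and text features.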

AMHAT is designed for both controlled laboratory studies and scalable deployment in real-world cognitive-aware applications, including mental wellness tools, digital therapeutics, and stress-aware interfaces.

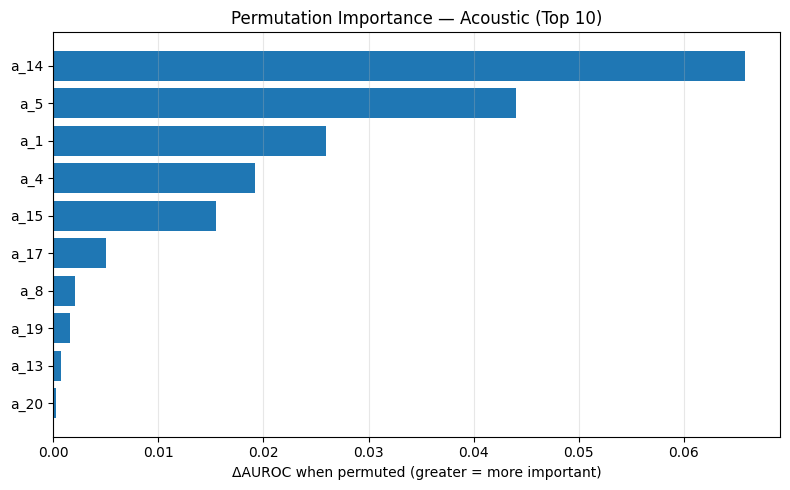

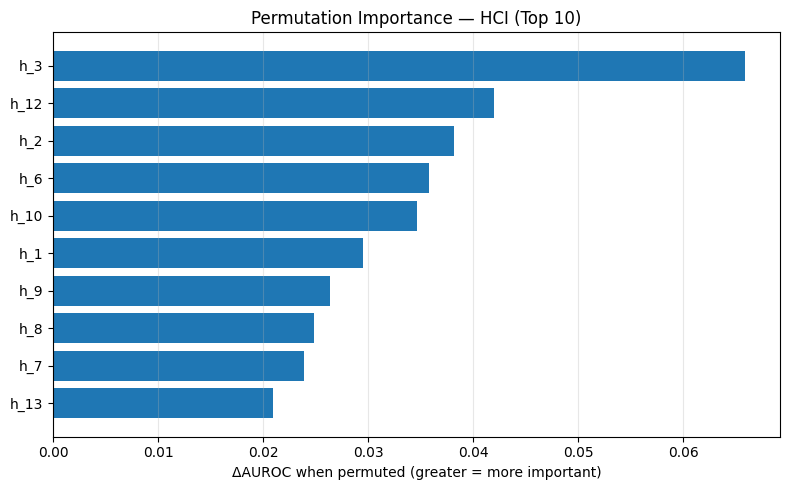

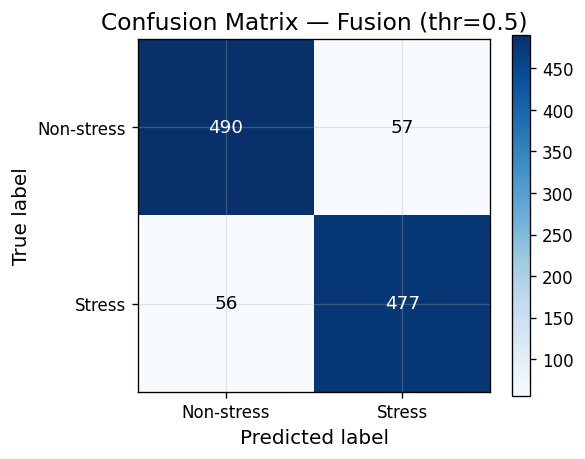

AMHAT consumes acoustic speech properties, linguistic features, and rich interaction signals such as keystroke timing and mouse dynamics. The modeling pipeline is uncertainty-aware and robust to missing modalities, which enables deployment in imperfect and heterogeneous environments without storing raw content.
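One simple way to combine per-modality estimates while tolerating missing inputs is inverse-variance weighting, sketched below. This is an illustrative assumption, not a description of AMHAT's actual fusion model: the function name `fuse_predictions`, the modality keys, and the convention that each modality reports a `(mean, variance)` pair or `None` are all hypothetical.

```python
from typing import Dict, Optional, Tuple

# Hypothetical per-modality estimate: (stress score mean, variance), or None if missing.
Estimate = Optional[Tuple[float, float]]


def fuse_predictions(preds: Dict[str, Estimate]) -> Estimate:
    """Inverse-variance weighted fusion over the modalities that are present.

    Modalities reported as None are skipped, so the result degrades
    gracefully instead of failing when a signal is unavailable.
    """
    available = [p for p in preds.values() if p is not None]
    if not available:
        return None  # nothing to fuse
    if any(var <= 0 for _, var in available):
        raise ValueError("variances must be positive")
    weights = [1.0 / var for _, var in available]
    total = sum(weights)
    fused_mean = sum(w * m for w, (m, _) in zip(weights, available)) / total
    fused_var = 1.0 / total  # more (or more confident) modalities shrink this
    return fused_mean, fused_var


result = fuse_predictions({
    "speech": (0.8, 0.04),
    "text": None,              # modality missing for this session
    "interaction": (0.6, 0.04),
})
print(result)
```

Under this scheme a confident modality dominates the fused score, and the fused variance grows when fewer modalities are available, which gives downstream logic a way to flag low-confidence screenings.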

AMHAT Project Team

Dr. Mehdi Ghayoumi

Principal Investigator, Lab Director

Prof. Tiffany Forsythe

Scientific Advisor

Cory Liu

Data Science Researcher

Elena M. Nye

Data Science Researcher

Dena Barmas

Data Science Researcher

Anthony Marrero

Data Science Researcher

Outputs and Sample Results

Publication and Poster

- M. Ghayoumi, E. M. Nye, C. Liu. “AMHAT: Multimodal Pipeline for Privacy-Preserving Stress Screening.” CSCI, 2025 (16.5% acceptance rate).

Code, Data Pipelines, and Resources

The AMHAT codebase includes data ingestion utilities, feature extraction scripts, model training and evaluation pipelines, and experiment configuration files. Documentation and example notebooks support reproducibility and adaptation to new datasets.

The public repository also links to de-identified, synthetic examples that demonstrate AMHAT feature representations while preserving participant privacy.

View AMHAT on GitHub

Contact and Collaboration

We welcome collaborations with researchers, clinicians, and industry partners who are interested in privacy-preserving stress and mental health assessment, multimodal sensing, and human-centered AI systems.

For inquiries about AMHAT, datasets, or potential collaborations, please contact the project lead using the email below.